Tejan, M. Part I: Misinformation in Public Health Emergencies. HPHR. 2021; 31.

DOI:10.54111/0001/EE9

Health misinformation is a health-related claim of fact that is currently false due to lack of scientific evidence. Misinformation frequently impacts health behaviors, particularly during Public Health Emergencies of International Concern (PHEICs). With the ongoing COVID-19 pandemic, misinformation will continue to undermine adherence to public health measures such as mask wearing and to contribute to vaccine hesitancy.

Health misinformation is a health-related claim of fact that is currently false due to lack of scientific evidence [1]. Misinformation implies false or inaccurate information that is deliberately created but shared either intentionally or unintentionally. Terms associated with misinformation [see Figure 1] include disinformation, fake news, rumors, urban legends, spam, and trolling. Disinformation is inaccurate information that is intentionally deceptive. Misinformation and disinformation both refer to inaccurate information; the distinction lies in the creator’s intention. Fake news is false information in the form of news, which is separate from disinformation because it might be unintentionally shared by innocent users. A rumor is unverified information that can be true or false. An urban legend is intentionally spread misinformation specific to local events, usually for entertainment purposes. Spam is irrelevant information that is sent to a large number of users. Trolling is a type of misinformation aimed at causing disruption and argument among a certain group of people. For the purposes of this paper, misinformation will be used as an umbrella term for all of the above phenomena because they are all considered inaccurate information with different sources and levels of intentionality. [2]

Misinformation can be divided into two categories: unintentionally spread and intentionally spread. Unintentionally spread misinformation is shared without any intent to deceive its recipients. Typically, this kind of misinformation spreads because of users’ trust in the information source, such as family, friends, colleagues, or influential users on social media. Intentionally spread misinformation is typically made by creators and coordinated groups who have a clear goal of sowing discord and manufacturing chaos. [3]

Despite its present-day association with social media, misinformation is not a new phenomenon. Individuals have always received health information from sources other than healthcare providers. For example, Listerine advertised between 1921 and 1974 that its mouthwash could cure colds and sore throats, and was later required to issue corrective advertising. Corporations such as Listerine, governments, and individuals can all be sources of misleading or deceptive health information. [4]

According to Article 1 of the International Health Regulations (IHR) adopted by the World Health Assembly (WHA) in 2005, a Public Health Emergency of International Concern (PHEIC) is an extraordinary event, which is determined:

(i) to constitute a public health risk to other States through the international spread of disease

(ii) to potentially require a coordinated international response [5]

Under the IHR, member states have a legal obligation to respond to a PHEIC declaration.

Misinformation has a long history in public health emergencies. A 2009 letter printed in the Athens News claimed that thimerosal, a preservative used in some of the H1N1 vaccines, caused autism, a theory that had been refuted by the time the letter was published. The same letter also claimed that squalene, another substance used in the H1N1 vaccine, caused Gulf War Syndrome, another claim that had been rejected by medical experts. [6] Examples of misinformation during the 2014 Ebola outbreak in West Africa include the beliefs that Ebola was not real, that Ebola was a politically motivated disease spread by non-governmental organizations and health personnel, and that body parts were being harvested in the Ebola isolation units. [7] This misinformation led to hostility towards healthcare workers, which in turn hampered the epidemic response. [8] Managing and dispelling misinformation, as well as mitigating the fear associated with PHEICs, are essential functions of emergency preparedness and response. [9]

A uniquely modern-day concern in the age of social media is the speed with which misinformation can spread and the number of people who can be reached. It takes only a retweet or share for thousands of people to view a piece of information that may or may not be accurate. [10] Some estimates suggest that over two-thirds of U.S. adults read news from social media, with 20% doing so frequently. According to a 2020 Reuters Institute survey, 36% of respondents from 12 countries use Facebook for weekly news while 21% use YouTube for weekly news. [11] Up to 80% of people seek health information online, thereby exposing themselves to potential misinformation. [12] The widespread use of social media as a news source makes it all the more important to verify health claims so that readers receive accurate information. [13]

A 2018 study by MIT analyzing Twitter found that misinformation spread significantly faster, farther, deeper, and more broadly than true information. According to the authors, it took truth about six times as long as misinformation to reach 1500 people and it took 20 times as long as misinformation to reach a cascade depth of 10, meaning the number of retweets in a single, unbroken chain. Misinformation was more novel than true information, suggesting that people are more likely to share new information. [14] This study highlights the fact that social media users are more inclined to spread sensationalized information rather than verified truth, and therefore that misinformation spreads significantly faster than truth.
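To make the two measures in the preceding paragraph concrete, the following is a minimal sketch of how the size and depth of a single retweet cascade could be computed. It is not the code used in the MIT study: the function name `cascade_stats`, the `(parent, child)` edge format, and the toy post identifiers are all assumptions made for illustration. Size is taken here to mean the number of posts in the cascade including the original, and depth the number of retweets in the longest unbroken chain starting from the original post.

```python
from collections import defaultdict


def cascade_stats(retweets, root):
    """Return (size, depth) for one retweet cascade.

    retweets: iterable of (parent_id, child_id) pairs, where child_id is a
              retweet of parent_id (hypothetical input format).
    root:     id of the original post.
    """
    # Build a parent -> children lookup from the edge list.
    children = defaultdict(list)
    for parent, child in retweets:
        children[parent].append(child)

    size = 1   # count the original post itself
    depth = 0  # longest chain of retweets found so far
    stack = [(root, 0)]
    while stack:
        node, hops = stack.pop()
        depth = max(depth, hops)
        for child in children.get(node, []):
            size += 1
            stack.append((child, hops + 1))
    return size, depth


# Toy cascade (invented data): "t0" is retweeted by "a" and "b",
# "a" is retweeted by "c", and "c" is retweeted by "d".
edges = [("t0", "a"), ("t0", "b"), ("a", "c"), ("c", "d")]
size, depth = cascade_stats(edges, "t0")
print(size, depth)
```

In this toy cascade the longest unbroken chain is t0, a, c, d, so the depth is 3 even though five posts are involved in total. A cascade can therefore be large without being deep, which is why the study reports reach and depth separately.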

According to a recent study on COVID-19 misinformation, the strongest predictors for belief in misinformation are a psychological predisposition to reject expert information and accounts of major events, a psychological predisposition to view major events as the product of conspiracy theories, and partisan and ideological motivations. [15] Certain misinformation beliefs are closely linked with political beliefs. For example, support for U.S. President Donald Trump is strongly related to the belief that COVID-19 has been exaggerated. Belief that COVID-19 was spread purposefully is slightly higher in self-identified conservatives than liberals. [16] The concern with these beliefs is the impact they have on behavior change.

According to Dr. Leesa Lin, Social and Behavioral Epidemiologist at the London School of Hygiene and Tropical Medicine (LSHTM), the answer to why misinformation spreads faster than truth lies in human coping mechanisms. [16] Human beings look for ways to cope with situations beyond their control. When novel disease outbreaks are in their early stages and so much is unknown, a natural gap in knowledge exists and misinformation fills those knowledge gaps. Further, disaster and emergency settings are breeding grounds for fear and stress. In the midst of these emotions, individuals can be driven to act based on potentially harmful combinations of fear and incomplete information.

When misinformation is present, there is a higher likelihood that the public will incorrectly interpret disaster and emergency warnings and behave in a manner contrary to their best interests. Based on the Knowledge Attitude Behavior Model, human behavior is driven by information reception. According to this model, individuals must actively receive information in order to gradually develop healthy beliefs and attitudes that are then reinforced with the adoption of healthy behaviors. [17] However, according to Dr. Lin, this is an oversimplified theory because human beings are not as rational as researchers might have previously assumed. [18]

According to the Emergency Decision-Making Framework, people suffer declines in cognitive functioning as a result of the anxiety caused by a stressful situation. There are five basic patterns of coping: vigilance, unconflicted inertia, unconflicted change to a new course of action, defensive avoidance, and hypervigilance. When a person is exposed to disaster warnings, they immediately begin to estimate:

Therefore, under this framework, human decision making is altered in emergency settings. Individuals take into account the trustworthiness of the information sources, which is often why public health experts look to community leaders to disseminate information in times of emergency.

According to Dr. Lin, public health officials cannot put an end to all misinformation, but the public health sector can focus on creating mechanisms for the public to use widely accepted, trusted sources of information. These trusted sources can be competent government officials such as Dr. Anthony Fauci, Director of the National Institute of Allergy and Infectious Diseases, or other community leaders who have been educated on public health practices and who can serve as a centralized source for disseminating evidence-based data in a transparent manner. [20]

Therefore, misinformation should be constantly monitored, particularly in PHEICs. In fact, the LSHTM is working on a tool to monitor social media misinformation sources, identifying hotspots and tracking the spread. This tool, which they intend to call a dashboard, will be used similarly to COVID-19 disease trackers, tracking disease misinformation as closely as the disease itself. Of course, such a tool does not give a holistic understanding of why misinformation starts to spread, but the dashboard could be essential in testing if risk communications are effective and in helping high-risk communities combat misinformation. [21]

In February 2020, Director General of the World Health Organization, Tedros Adhanom, stated, “…We’re not just fighting an epidemic; we’re fighting an infodemic. Fake news spreads faster and more easily than this virus and is just as dangerous. That’s why we’re also working with search and media companies like Facebook, Google, Pinterest, Tencent, Twitter, TikTok, YouTube and others to counter the spread of rumors and misinformation.” [22]

The term infodemic, first used in 2003 in relation to the SARS epidemic, refers to the rapid proliferation of information that is often false or uncertain. The international community has widely acknowledged the concern of the COVID-19 infodemic and the importance of working with social media platforms to stop it. Many platforms added labels to misinformation posts or removed them completely. For example, U.S. President Donald Trump tweeted a statement claiming that COVID-19 was less lethal than the seasonal flu, a statement not rooted in scientific evidence. Twitter hid the post and added a warning stating that the post was “spreading misleading and potentially harmful information,” and Facebook deleted the post completely [see Figure 3]. [23]

However, there are concerns about the impacts of this censorship. Censorship on these platforms is not a new concept, as posts have been routinely removed if they fail to adhere to content guidelines. Content deemed “hate speech”, “glorification of violence”, or “harmful or dangerous content” is typically removed. These labels are intentionally broad and vague and leave room for interpretation. To some, censorship on these sites seems to violate the platforms’ core and founding principles of freedom of speech and freedom of expression. Researchers have called for medical and health-related censorship to be separated from all other forms of censorship, because health information can be objectively judged based on scientific evidence instead of subjective opinion or speculation. [24]

In March 2020, tech companies like Facebook, Twitter, and YouTube issued a joint statement stating that they were “jointly combatting fraud and misinformation about the virus, elevating authoritative content on their platforms.” [25] They began utilizing educational popups linking to WHO information, introducing warning labels on false or misleading information, and removing content contrary to health authorities’ recommendations. Conflict arises, however, over who decides what counts as false or harmful information. Social media companies could follow the guidelines of leading health authorities, but they too make mistakes. In emergency settings or with a novel disease, there are disagreements among health authorities on best practices. [26] Further, even health authorities are impacted by political pressures or can be slow to update best practices. These conflicts have led some to decide that censorship is too great a responsibility with too many risks to individual freedoms. Some research has instead recommended education and awareness raising during schooling as a potential solution to health misinformation. [27]

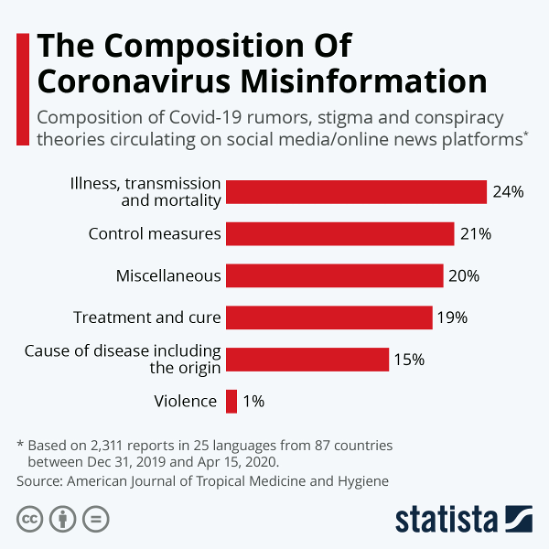

In a study of the composition of coronavirus misinformation, researchers found that 24% of COVID-19 misinformation related to illness, transmission, and mortality, 21% related to control measures, 19% related to treatment and cure, and 15% related to the cause of the disease, including its origin [see Figure 2]. [29]

Examples of COVID-19 related misinformation that have circulated on social media include: [30]

- “Novel coronavirus is in the cloud”
- “Mobile phones can transmit coronavirus”
- “Drinking bleach may kill the virus”
- “Vitamin D can prevent coronavirus infection”
- “COVID-19 outbreak was planned”
- “The virus is an attempt to wage an economic war on China”
- “This outbreak is a population control scheme”
- “Biological weapon manufactured by CIA”

[1] Chou, Wen-Ying Sylvia, Oh, April, & Klein, William M. P. (2018). Addressing Health-Related Misinformation on Social Media. JAMA: The Journal of the American Medical Association, 320(23), 2417-2418.

[2] Wu, Liang, Morstatter, Fred, Carley, Kathleen, & Liu, Huan. (2019). Misinformation in Social Media. SIGKDD Explorations, 21(2), 80-90.

[3] Wu, Liang, Morstatter, Fred, Carley, Kathleen, & Liu, Huan. (2019). Misinformation in Social Media. SIGKDD Explorations, 21(2), 80-90.

[4] Swire-Thompson, Briony, & Lazer, David. (2020). Public Health and Online Misinformation: Challenges and Recommendations. Annual Review of Public Health, 41(1), 433-451.

[5] IHR Procedures concerning public health emergencies of international concern (PHEIC). (2017, October 04). Retrieved December 16, 2020, from http://www.who.int/ihr/procedures/pheic/en/

[6] Blenner, A. (2009, November 2). Letter passed off misinformation about H1N1 (swine flu) vaccine. The Athens News (Athens, Ohio).

[7] Ebola Myths and Facts: 9 Deadly Misconceptions. (n.d.). Retrieved December 16, 2020, from https://www.concernusa.org/story/ebola-myths-facts/

[8] Chou, Wen-Ying Sylvia, Oh, April, & Klein, William M. P. (2018). Addressing Health-Related Misinformation on Social Media. JAMA: The Journal of the American Medical Association, 320(23), 2417-2418.

[9] Islam, M. S., Sarkar, T., Khan, S. H., Kamal, A. M., Hasan, S. M., Kabir, A., . . . Seale, H. (2020). COVID-19–Related Infodemic and Its Impact on Public Health: A Global Social Media Analysis. The American Journal of Tropical Medicine and Hygiene, 103(4), 1621-1629. doi:10.4269/ajtmh.20-0812

[10] Wu, Liang, Morstatter, Fred, Carley, Kathleen, & Liu, Huan. (2019). Misinformation in Social Media. SIGKDD Explorations, 21(2), 80-90.

[11] COVID-19 and misinformation: Is censorship of social media a remedy to the spread of medical misinformation? (2020). EMBO Reports, 21(11), E51420.

[12] Vogel, L. (2017). Viral misinformation threatens public health. Canadian Medical Association Journal (CMAJ), 189(50), E1567.

[13] Wu, Liang, Morstatter, Fred, Carley, Kathleen, & Liu, Huan. (2019). Misinformation in Social Media. SIGKDD Explorations, 21(2), 80-90.

[14] Vosoughi, Soroush, Roy, Deb, & Aral, Sinan. (2018). The spread of true and false news online. Science (American Association for the Advancement of Science), 359(6380), 1146-1151.

[15] Uscinski, Joseph E, Enders, Adam M, Klofstad, Casey, Seelig, Michelle, Funchion, John, Everett, Caleb, . . . Murthi, Manohar. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School Misinformation Review, 2020-04-28.

[16] Uscinski, Joseph E, Enders, Adam M, Klofstad, Casey, Seelig, Michelle, Funchion, John, Everett, Caleb, . . . Murthi, Manohar. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School Misinformation Review, 2020-04-28.

[17] Interview with Dr. Leesa Lin, Social and Behavioral Epidemiologist at the London School of Hygiene & Tropical Medicine [Online interview]. (2020, November 10).

[18] Liu, Li, Liu, Yue-Ping, Wang, Jing, An, Li-Wei, & Jiao, Jian-Mei. (2016). Use of a knowledge-attitude-behaviour education programme for Chinese adults undergoing maintenance haemodialysis: Randomized controlled trial. Journal of International Medical Research, 44(3), 557-568.

[19] Interview with Dr. Leesa Lin, Social and Behavioral Epidemiologist at the London School of Hygiene & Tropical Medicine [Online interview]. (2020, November 10).

[20] Janis, Irving L, & Mann, Leon. (1977). Emergency Decision Making: A Theoretical Analysis of Responses to Disaster Warnings. Journal of Human Stress, 3(2), 35-48.

[21] Interview with Dr. Leesa Lin, Social and Behavioral Epidemiologist at the London School of Hygiene & Tropical Medicine [Online interview]. (2020, November 10).

[22] Interview with Dr. Leesa Lin, Social and Behavioral Epidemiologist at the London School of Hygiene & Tropical Medicine [Online interview]. (2020, November 10).

[23] Ghebreyesus, T. (2020, February 15). Munich Security Conference. Speech. Retrieved December 2, 2020, from https://www.who.int/director-general/speeches/detail/munich-security-conference

[24] Spring, M. (2020, October 06). Trump Covid post deleted by Facebook and hidden by Twitter. Retrieved December 10, 2020, from https://www.bbc.com/news/technology-54440662

[25] COVID-19 and misinformation: Is censorship of social media a remedy to the spread of medical misinformation? (2020). EMBO Reports, 21(11), E51420.

[26] Microsoft. (2020, March 16). A joint industry statement on COVID-19 from Microsoft, Facebook, Google, LinkedIn, Reddit, Twitter and YouTube. Retrieved December 16, 2020, from https://twitter.com/Microsoft/status/1239703041109942272?ref_src=twsrc%5Etfw%7Ctwcamp%5Etweetembed%7Ctwterm%5E1239703041109942272%7Ctwgr%5E%7Ctwcon%5Es1_&ref_url=https%3A%2F%2Fwww.bloomberg.com%2Fnews%2Farticles%2F2020-03-17%2Ffacebook-microsoft-google-team-up-against-virus-misinformation

[27] COVID-19 and misinformation: Is censorship of social media a remedy to the spread of medical misinformation? (2020). EMBO Reports, 21(11), E51420.

[28] COVID-19 and misinformation: Is censorship of social media a remedy to the spread of medical misinformation? (2020). EMBO Reports, 21(11), E51420.

[29] Islam, Md Saiful, Sarkar, Tonmoy, Khan, Sazzad Hossain, Mostofa Kamal, Abu-Hena, Hasan, S. M. Murshid, Kabir, Alamgir, . . . Seale, Holly. (2020). COVID-19–Related Infodemic and Its Impact on Public Health: A Global Social Media Analysis. The American Journal of Tropical Medicine and Hygiene, 103(4), 1621-1629.

[30] Islam, Md Saiful, Sarkar, Tonmoy, Khan, Sazzad Hossain, Mostofa Kamal, Abu-Hena, Hasan, S. M. Murshid, Kabir, Alamgir, . . . Seale, Holly. (2020). COVID-19–Related Infodemic and Its Impact on Public Health: A Global Social Media Analysis. The American Journal of Tropical Medicine and Hygiene, 103(4), 1621-1629.


© 2025-2026 Boston Congress of Public Health (BCPHR): An Academic, Peer-Reviewed Journal

All Boston Congress of Public Health (BCPH) branding and content, including logos, program and award names, and materials, are the property of BCPH and trademarked as such. BCPHR articles are published under Open Access license CC BY. All BCPHR branding falls under BCPH.
